How Mid-Market CEOs Can Deploy AI at Lightning Speed (Without Cutting Corners)

An interview with Roja Buck, Founding Partner at Rational Partners, on navigating AI adoption without creating compliance nightmares.

The pressure is real. Your board wants AI implemented yesterday, your employees are already using ChatGPT for work, and your competitors claim massive productivity gains. As CEO, you're caught in the middle: the business demands innovation, but your compliance obligations don't go away. How do you move fast without creating a compliance disaster?

We sat down with Roja Buck, founding partner at Rational Partners, to discuss a practical framework for AI deployment and how CEOs can use it to weigh the trade-offs with their CTO and operating partners.

The Shadow IT Problem Is Already Here

What's the biggest risk CEOs are missing when it comes to AI adoption?

Roja: For many companies, the breach has already happened. The moment your employees send personally identifiable information to a third-party AI processor without the right agreements and safeguards in place, you're likely in breach of GDPR, HIPAA, or whichever compliance framework applies to your industry.

I was recently working with a healthcare client who spent months evaluating customer service platforms. They did thorough due diligence on data storage, security, compliance – everything. But nobody asked where the AI processing was happening. Had they deployed the platform that made it to the end of the procurement process, all of their data would have gone to a third-party AI vendor's servers in the US, breaching multiple data protection and data sovereignty rules.

So this isn't a future problem – it's happening now?

Roja: Absolutely. Every business whose employees use AI tools is potentially already in breach. Before GDPR, there was widespread naivety about data protection, and we're in that same phase with AI now. Eventually there will be a big public breach, followed by regulatory action, and everyone will wake up. Despite good intentions, personal information will be used for training and become entangled deep within what those systems know. From there, it's only a matter of time before it ends up in the public domain, where it's nearly impossible to trace or expunge. It's the Wild West.

A Framework for Thinking About AI Security

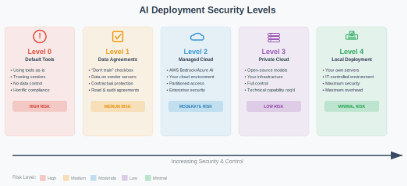

You've developed a simple five-level framework for AI deployment. Can you walk us through it?

Roja: Think of it less as a rigid framework and more as a technology readiness level for AI security. It's a way of framing the problem, and a pragmatic, actionable path to leveling up. Each step up gives you more protection but requires more investment and adds operational burden.

Level 0 is what most companies are doing now – using tools as they come and trusting vendors not to misuse data. From a compliance perspective it's likely a breach, and it's careless towards the people whose data is being shared, but it's incredibly common.

Level 1 is where you tick the "don't train on my data" box with providers like Anthropic or OpenAI, or put an explicit no-training agreement in place with a provider. Your data still sits on their servers, but they agree not to train models on it. It's similar to a data sharing agreement: the kind you tend not to read, audit, or enforce.

Level 2 is managed environments like AWS Bedrock or Azure AI. You run models in a cloud environment with enterprise-grade security, under the agreements you already have in place with those providers. The provider gives you a partitioned environment that only you can access, with static models and no information passed back to the model's producer.

Level 3 moves to private cloud deployment, where you run open-source or company-built models on strictly partitioned, though multi-tenant, infrastructure. That gives you full control over where data is processed and which models are used, plus total visibility of where the data travels and resides. It's no longer an off-the-shelf service: it requires the kind of technical capability you'd find in a moderately mature technology team.

Level 4 is self-provisioned deployment on your own hardware, in an IT-controlled environment. It's similar to Level 3 but with single-tenant ownership and physical separation and controls. Maximum security, maximum control, maximum overhead.

The Practical Reality for Mid-Market Companies

For a 200-300 person company, what's realistic?

Roja: Here's the real challenge – you're not dealing with one AI tool. Your developers want GitHub Copilot for coding, marketing needs Jasper for copywriting, sales wants Gong for call analysis, executives use Otter for meeting notes, and someone's inevitably building presentations with Gamma. Each tool has its own data processing agreement, its own security model, its own compliance requirements.

The harsh reality? For most of these specialised tools, you're stuck at Level 1 – ticking the "don't train on my data" box and hoping for the best. You can't easily recreate GitHub Copilot's coding intelligence or Gong's sales analytics on your own infrastructure without losing much of the functionality that makes them valuable. This may change as self-hosted solutions mature, but that's likely at least 12 months away.

This is where IT governance becomes critical. You need a centralised process for evaluating these tools, standardising data agreements, and ensuring consistent security policies across your entire AI stack.

The Build vs. Buy Dilemma

How should CEOs think about the build vs. buy decision with AI?

Roja: This is where many companies get stuck. You evaluate these AI-powered solutions thinking you only need to build "this bit" – the AI part. But there's a huge iceberg of other functionality you also need to recreate.

For an education client, the decision became: use the customer service platform but filter out all personally identifiable information going into it. You lose specificity and much of the value, but you stay compliant.

The alternative is building custom tooling that integrates with a Level 2+ provider, then trying to reimplement all the functionality the service provider has already built. Unless you have significant IT resources, that's often not realistic for mid-market companies.

Fortunately, we identified a smarter approach: wrapping the third-party system in a custom anonymisation layer. But even this, though much simpler than building a whole tool in-house, required investment and proactive engagement with AI security across multiple departments.
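The interview doesn't describe how that anonymisation layer was built. As a rough illustration only, here is a minimal Python sketch of one common pattern, reversible pseudonymisation. It assumes PII can be spotted with a couple of simple regular expressions (real deployments need far broader detection), and `vendor_call` is a hypothetical stand-in for the third-party platform's API. PII is swapped for placeholder tokens before the request leaves your environment, and the token map, which never leaves, is used to restore the real values in the response.

```python
import re
from typing import Callable, Dict, Tuple

# Illustrative patterns only (an assumption): real PII detection must also
# cover names, addresses, record numbers, etc., usually with a dedicated tool.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE": re.compile(r"\+?\d[\d\s-]{7,}\d"),
}


def pseudonymise(text: str) -> Tuple[str, Dict[str, str]]:
    """Replace detected PII with placeholder tokens; return text and token map."""
    mapping: Dict[str, str] = {}
    for label, pattern in PII_PATTERNS.items():
        def to_token(match: "re.Match[str]") -> str:
            value = match.group(0)
            # Reuse the existing token if this value has been seen before.
            for token, original in mapping.items():
                if original == value:
                    return token
            token = f"[{label}_{len(mapping) + 1}]"
            mapping[token] = value
            return token

        text = pattern.sub(to_token, text)
    return text, mapping


def restore(text: str, mapping: Dict[str, str]) -> str:
    """Swap placeholder tokens in the vendor's response back to real values."""
    for token, original in mapping.items():
        text = text.replace(token, original)
    return text


def call_with_anonymisation(prompt: str, vendor_call: Callable[[str], str]) -> str:
    """Hypothetical wrapper: only the pseudonymised prompt leaves your environment."""
    safe_prompt, mapping = pseudonymise(prompt)
    response = vendor_call(safe_prompt)  # the third-party AI service sees tokens only
    return restore(response, mapping)
```

Because the mapping stays in-house, the vendor only ever sees tokens such as `[EMAIL_1]`, while staff still get responses that refer to the real customer. Whether that preserves enough specificity to be worthwhile depends on the tool and the data involved.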

Making the Decision

What's your advice for CEOs facing this decision?

Roja: Start with the fundamentals. What's the single biggest problem this AI tool could solve for you? Work backwards from there. Don't let the hammer (AI) convince you that everything is a nail.

Most importantly, involve your data protection officer or equivalent early. Ask the right compliance questions upfront, not after you've already signed the contract. The questions to ask are:

- Where is data processing happening?

- What data security and provisioning is in place?

- Do we have clear agreements about data usage and training?

- Does this comply with our existing regulatory requirements?

Any final thoughts for CEOs?

Roja: The reality is you're sometimes going to be caught between a rock and a hard place. The business demands innovation, but you can't ignore the compliance reality. The framework gives you a way to have productive conversations with your CTO and operating partners about the trade-offs at each level. Those conversations may lead to a novel or hybrid approach, but at a minimum it should keep your data, and your customers' data, safe.

Don't wait for the perfect enterprise AI strategy – that just encourages more shadow IT. But don't ignore the risks either. Start with Level 1 or 2, get comfortable with the technology, and evolve your approach as your needs and capabilities grow.

Navigating AI adoption in your business?

Rational Partners helps mid-market companies balance AI innovation with compliance reality. Let's talk.